Project 2: Fun with Filters and Frequencies!

-Boyu Zhu

Intro

In this project, we explore filters and frequencies through a series of fun experiments, including hybrid images and image blending. Welcome to my portfolio!

Part 1: Fun with Filters

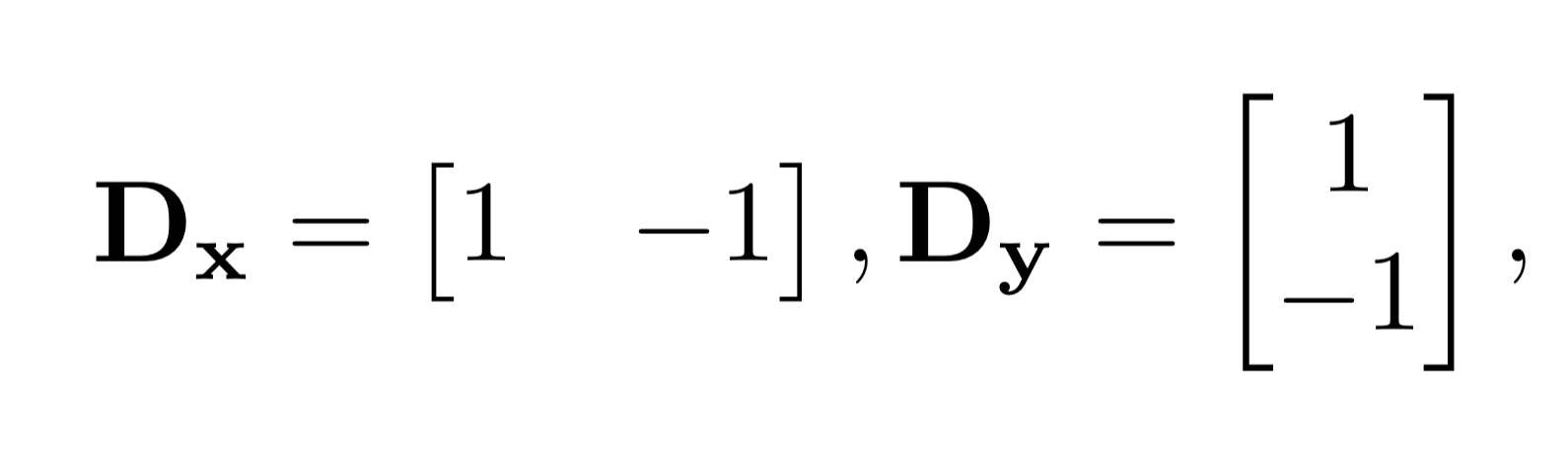

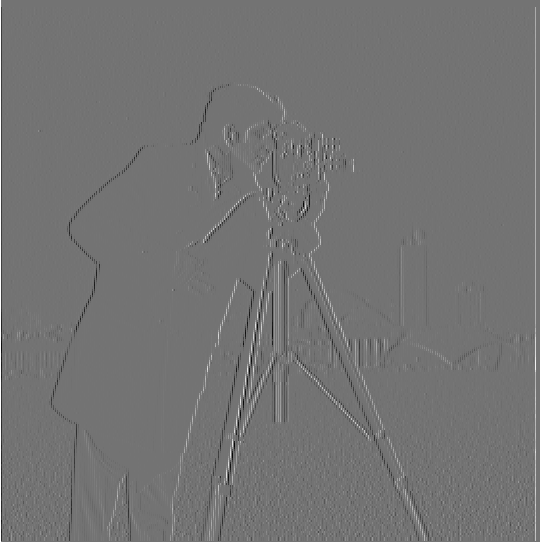

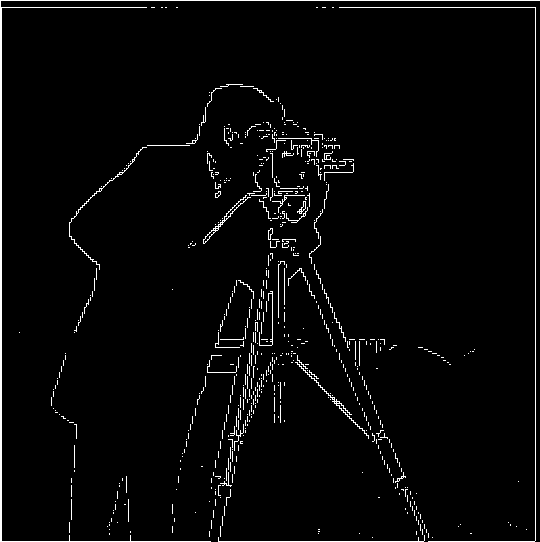

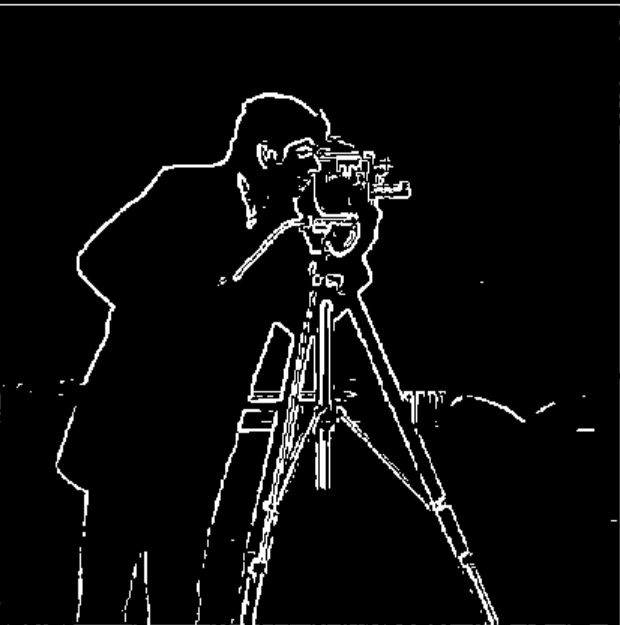

Part 1.1: Finite Difference Operator

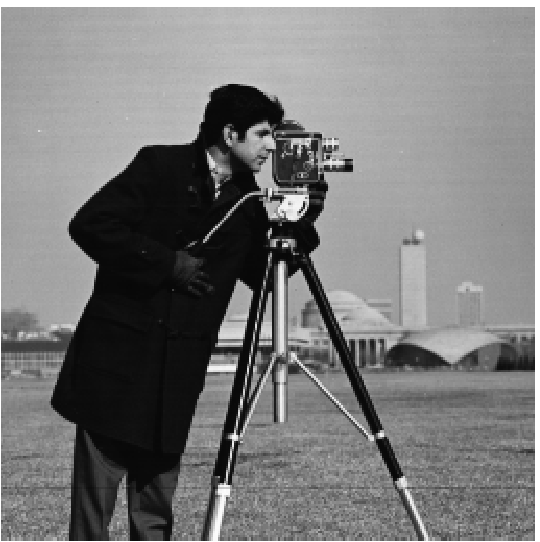

First, we begin by applying finite difference filters to compute the gradients of the image in both the horizontal (x) and vertical (y) directions independently.

Then we calculate the gradient magnitude at each pixel: we square the x and y gradients, sum the squared values, and take the square root of the result.
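The two steps above can be sketched as follows. This is a minimal sketch, not the exact code used for the results shown here: it assumes a 2-D grayscale float image, simple two-tap difference operators named `D_x` and `D_y`, and `scipy.signal.convolve2d` with symmetric padding. The function name `gradient_magnitude` is illustrative.

```python
import numpy as np
from scipy.signal import convolve2d

# Assumed finite difference operators (two-tap differences).
D_x = np.array([[1.0, -1.0]])
D_y = np.array([[1.0], [-1.0]])

def gradient_magnitude(image):
    """Return (gx, gy, magnitude) for a 2-D grayscale float image."""
    # Partial derivatives in the horizontal and vertical directions.
    gx = convolve2d(image, D_x, mode="same", boundary="symm")
    gy = convolve2d(image, D_y, mode="same", boundary="symm")
    # Square both gradients, sum them, and take the square root.
    magnitude = np.sqrt(gx ** 2 + gy ** 2)
    return gx, gy, magnitude

# Tiny synthetic example: a vertical step edge.
image = np.zeros((5, 6))
image[:, 3:] = 1.0
gx, gy, mag = gradient_magnitude(image)
```

On the step-edge example, only the horizontal gradient responds, so the magnitude is nonzero in a single column.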

original image

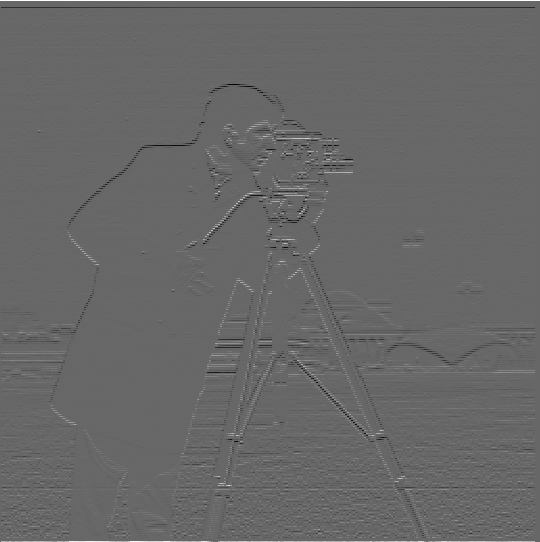

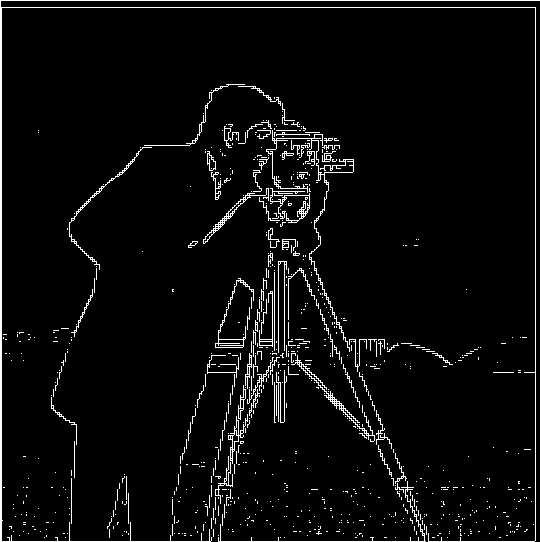

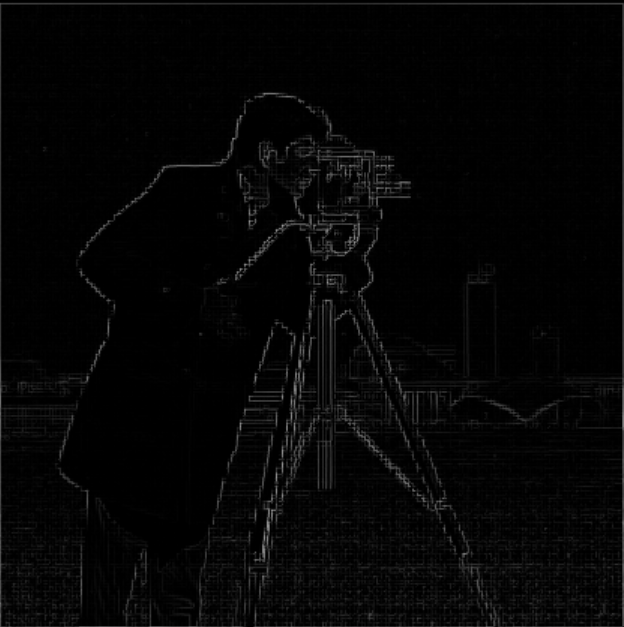

gradient magnitude

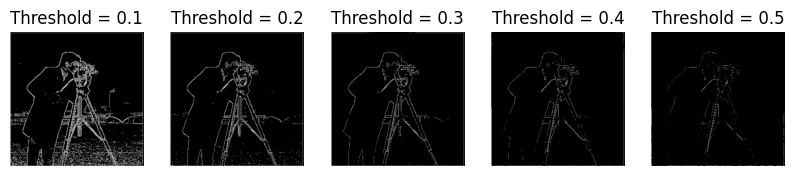

picking the appropriate threshold

Through observation, I believe that the image with a threshold of 0.2 strikes a balance between richness of detail and noise level.
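Binarizing with a threshold can be sketched as below. This assumes the gradient magnitude comes from an image scaled to [0, 1], so that a value such as 0.2 is meaningful. The helper `binarize` and the small `mag` array are made up for illustration.

```python
import numpy as np

def binarize(magnitude, threshold=0.2):
    """Mark pixels whose gradient magnitude exceeds the threshold as edges."""
    return (magnitude > threshold).astype(np.float64)

# Hypothetical gradient magnitudes, only to compare thresholds.
mag = np.array([[0.05, 0.15],
                [0.25, 0.90]])

for t in (0.1, 0.2, 0.3):
    edges = binarize(mag, t)
    print(t, int(edges.sum()))  # number of edge pixels kept at this threshold
```

A lower threshold keeps more detail but also more noise; a higher one removes noise but drops weak edges.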

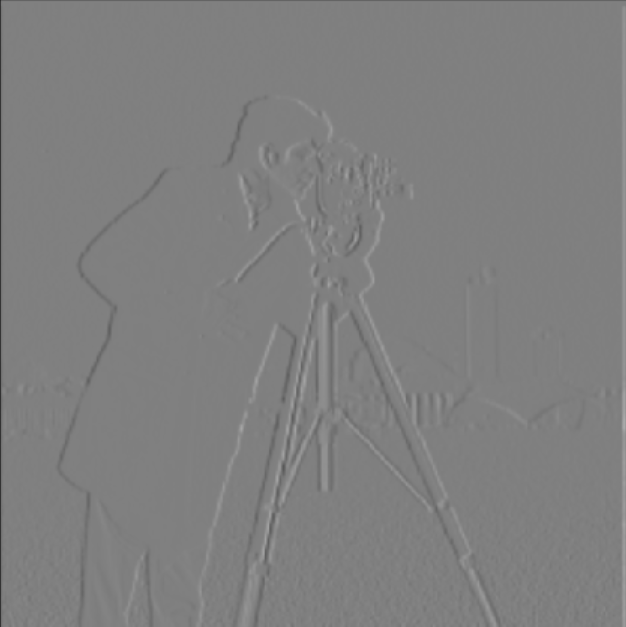

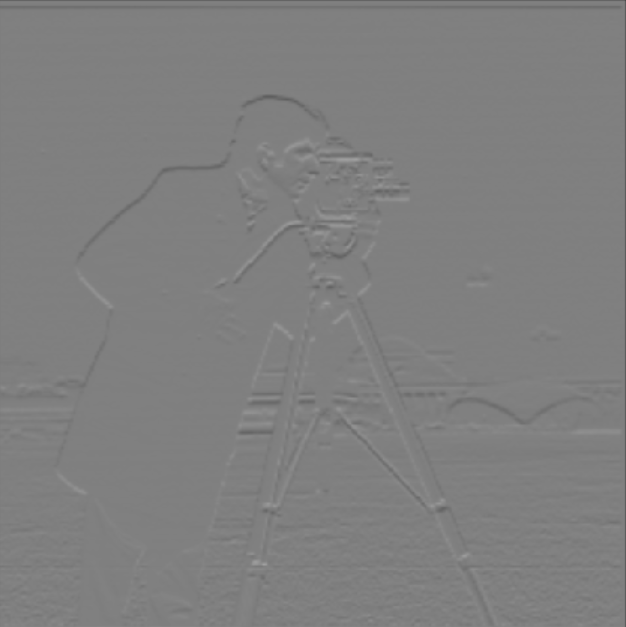

Part 1.2: Derivative of Gaussian (DoG) Filter

use a Gaussian filter to blur the original image

blurred image

What differences do you see?

Compared to the gradient magnitude image computed without Gaussian blurring, the gradient image computed after blurring shows fewer noise artifacts after binarization, and the edges are clearer and thicker.

With a single convolution

We first compute the gradient of the Gaussian kernel in the x and y directions, and then convolve the image with the resulting derivative-of-Gaussian kernels to obtain the blurred gradients in the x and y directions.

The result is the same as the one above.
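The equivalence between the two-step and single-convolution approaches can be checked with a short sketch. The kernel size, sigma, the two-tap operators `D_x` and `D_y`, and the helper `gaussian_kernel` are all assumptions made for illustration, and the image is random test data.

```python
import numpy as np
from scipy.signal import convolve2d

def gaussian_kernel(size, sigma):
    """2-D Gaussian kernel built as the outer product of a normalized 1-D Gaussian."""
    ax = np.arange(size) - (size - 1) / 2.0
    g1d = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    g1d /= g1d.sum()
    return np.outer(g1d, g1d)

D_x = np.array([[1.0, -1.0]])
D_y = np.array([[1.0], [-1.0]])

G = gaussian_kernel(size=7, sigma=1.0)
# Derivative-of-Gaussian kernels: differentiate the Gaussian once, up front.
DoG_x = convolve2d(G, D_x)
DoG_y = convolve2d(G, D_y)

rng = np.random.default_rng(0)
image = rng.random((32, 32))

# Two steps: blur first, then take the finite difference.
two_step = convolve2d(convolve2d(image, G), D_x)
# One step: convolve directly with the derivative-of-Gaussian kernel.
one_step = convolve2d(image, DoG_x)

max_diff = np.abs(two_step - one_step).max()
```

With full, zero-padded convolutions the two results agree up to floating-point error, because convolution is associative. With cropped (`mode="same"`) outputs, small differences can appear near the borders.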

Part 2: Fun with Frequencies!

Part 2.1: Image "Sharpening"

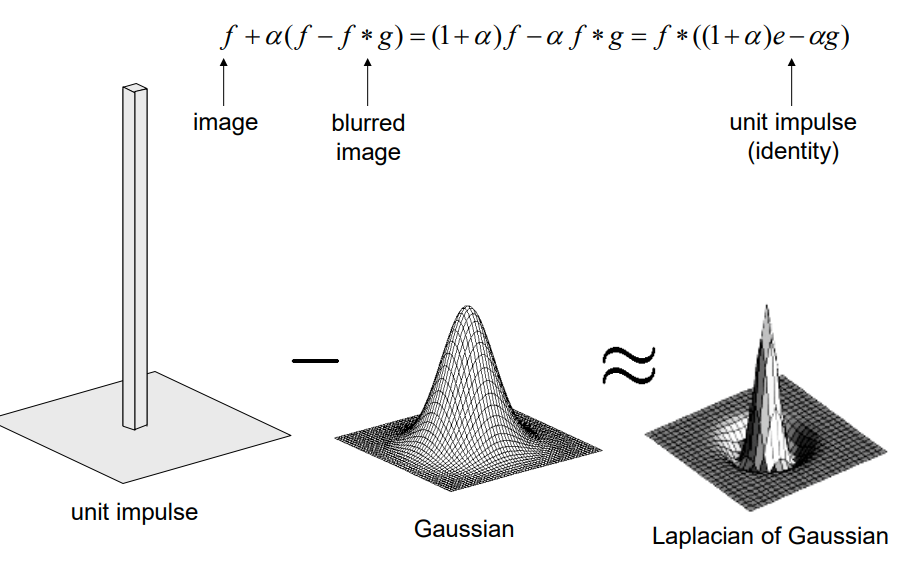

Image sharpening is achieved by adding the original image to the high-frequency components.

sharpened = original + alpha * high_frequency

We can also accomplish this by applying a single filter:
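One way to write the single filter is the unsharp mask kernel (1 + alpha) * impulse - alpha * G, where G is the Gaussian used for blurring. The sketch below assumes an odd kernel size, symmetric padding, and the hypothetical helper names `gaussian_kernel` and `unsharp_mask_kernel`. It checks that the single filter matches the two-step formula on random test data.

```python
import numpy as np
from scipy.signal import convolve2d

def gaussian_kernel(size, sigma):
    """2-D Gaussian kernel built as the outer product of a normalized 1-D Gaussian."""
    ax = np.arange(size) - (size - 1) / 2.0
    g1d = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    g1d /= g1d.sum()
    return np.outer(g1d, g1d)

def unsharp_mask_kernel(size, sigma, alpha):
    """Single sharpening kernel: (1 + alpha) * impulse - alpha * Gaussian (size must be odd)."""
    G = gaussian_kernel(size, sigma)
    impulse = np.zeros_like(G)
    impulse[size // 2, size // 2] = 1.0
    return (1.0 + alpha) * impulse - alpha * G

rng = np.random.default_rng(0)
image = rng.random((16, 16))
alpha, size, sigma = 1.5, 9, 2.0

# Two steps: extract the high frequencies, then add them back.
blurred = convolve2d(image, gaussian_kernel(size, sigma), mode="same", boundary="symm")
two_step = image + alpha * (image - blurred)

# One step: a single convolution with the combined kernel.
kernel = unsharp_mask_kernel(size, sigma, alpha)
one_step = convolve2d(image, kernel, mode="same", boundary="symm")
```

The combined kernel sums to 1, so flat regions keep their brightness while edges are amplified.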

evaluation

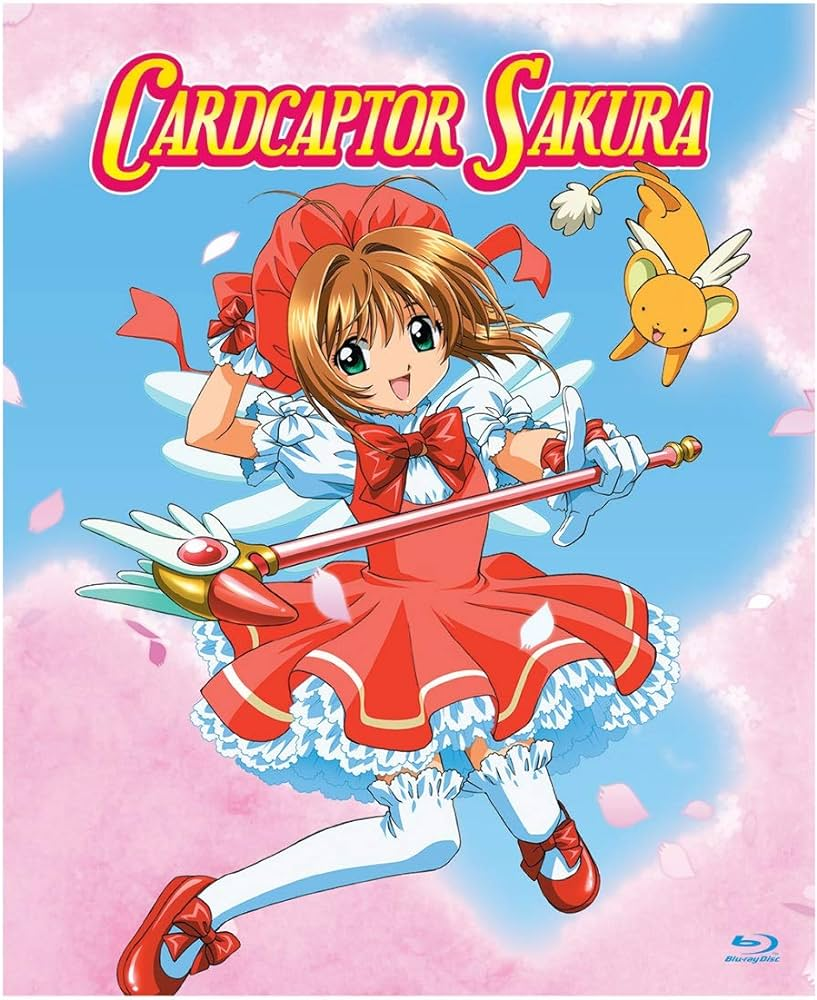

cardcaptor sakura

Compared to the blurred image, a sharpened image restores some of the lost details, but it cannot recover all the fine details. The colors in a sharpened image tend to appear more vibrant.

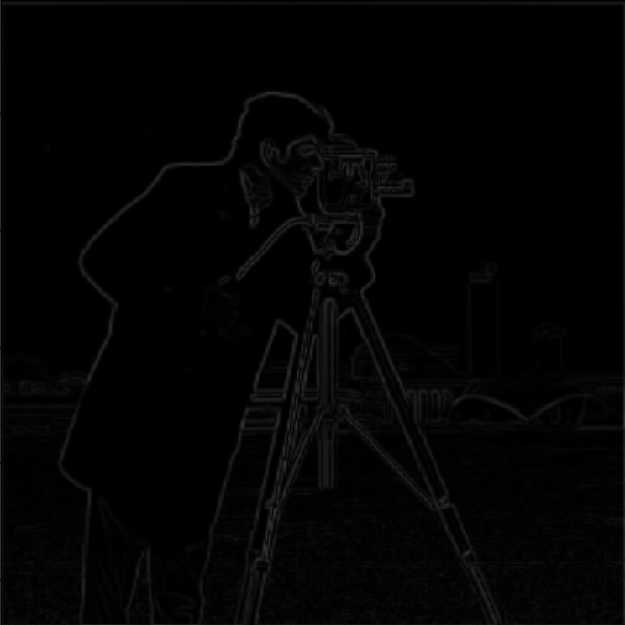

Part 2.2: Hybrid Images

In this part, we use two images to create a single image that can be interpreted differently at various viewing distances. One image supplies the high-frequency content and the other supplies the low-frequency content.
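A minimal sketch of the idea, assuming two already aligned grayscale float images in [0, 1] and using `scipy.ndimage.gaussian_filter` for the low-pass step. The function name `hybrid_image` and the two sigma parameters are illustrative, not the exact values used for the results here.

```python
import numpy as np
from scipy.ndimage import gaussian_filter

def hybrid_image(im_low, im_high, sigma_low, sigma_high):
    """Combine the low frequencies of im_low with the high frequencies of im_high."""
    # Low-pass: Gaussian blur keeps only the coarse structure.
    low = gaussian_filter(im_low, sigma_low)
    # High-pass: subtract the blurred image from the original.
    high = im_high - gaussian_filter(im_high, sigma_high)
    return np.clip(low + high, 0.0, 1.0)

# Sanity check on flat images: a constant image has no high frequencies,
# so the hybrid equals the low-frequency image.
im_low = np.full((8, 8), 0.5)
im_high = np.full((8, 8), 0.3)
result = hybrid_image(im_low, im_high, sigma_low=4.0, sigma_high=2.0)
```

For color images, the same function could be applied to each channel separately.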

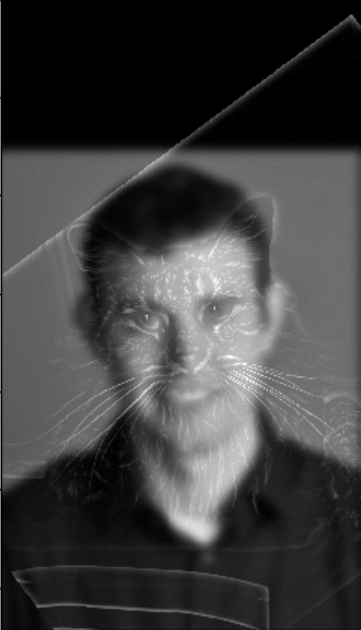

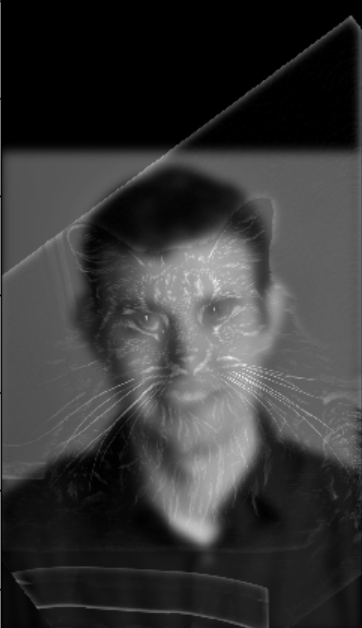

Failed example

In this case, the hybrid image does not work very well when viewed from far away. Although I tried several combinations of sigma and kernel size, the result still did not look convincing on visual inspection.

My hypotheses are:

- Hybrid images need the shapes in the two images to match to some degree. In this case, the head angles of the two men differ, so it is difficult to align the two images.

- I suspect that human eyes are sensitive to the shape of human faces, so we can still perceive the high-frequency face.

comparison of use of color for hybrid images

Generally speaking, the low-frequency image plays a dominant role in determining the overall color composition of the hybrid image. However, the high-frequency image also influences the final result by adding fine details and sharp edges, which enhance the perceived quality of the image.

Therefore, using color for both the low-frequency and high-frequency images results in a higher-quality hybrid image.

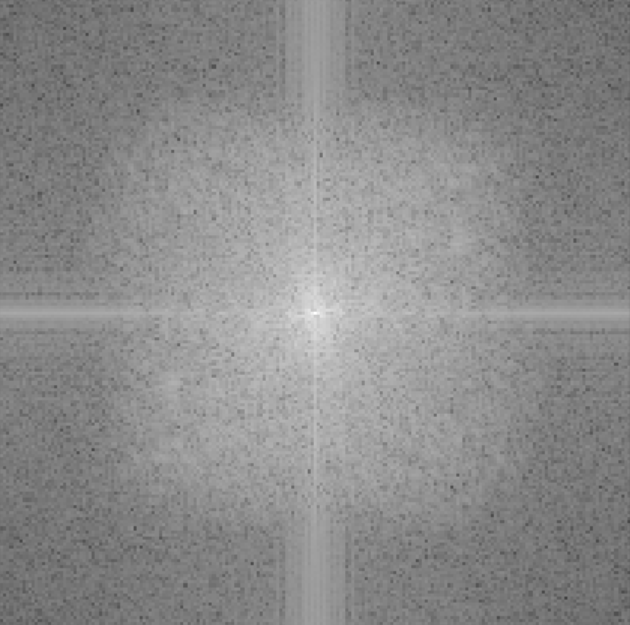

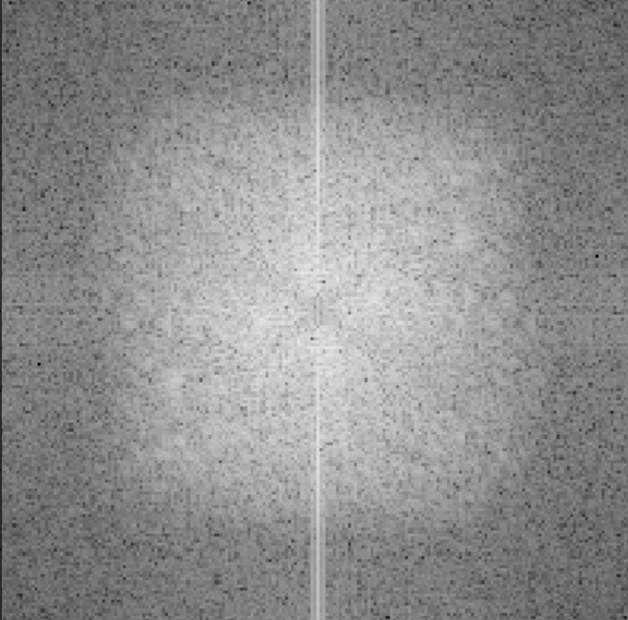

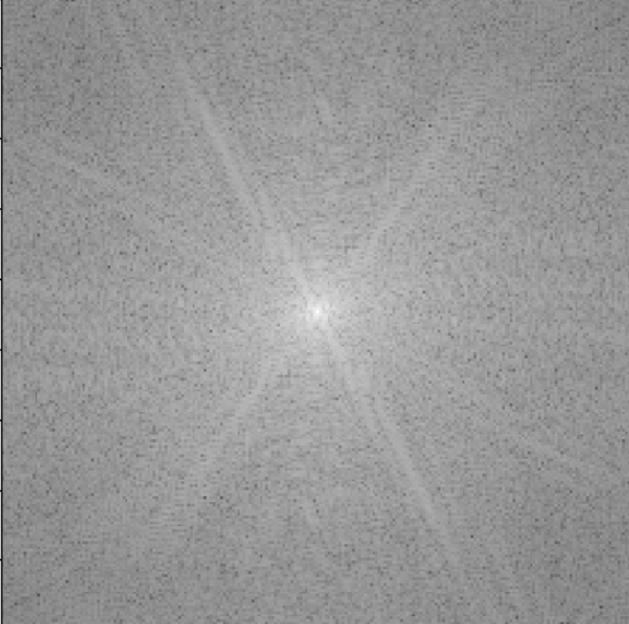

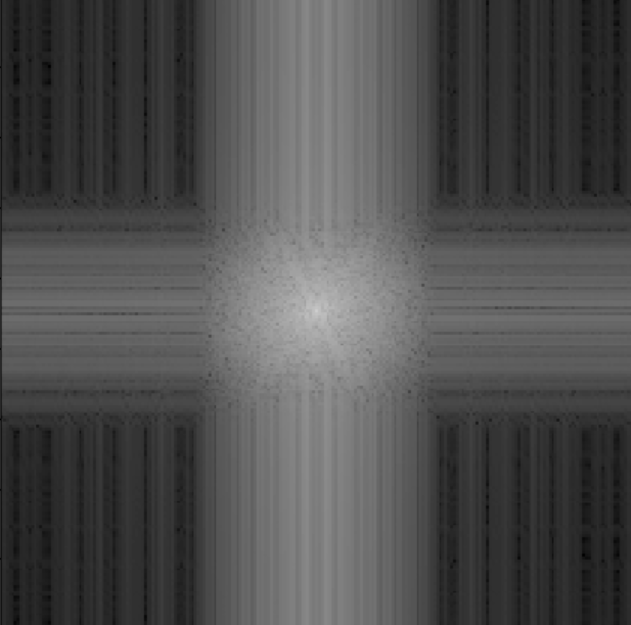

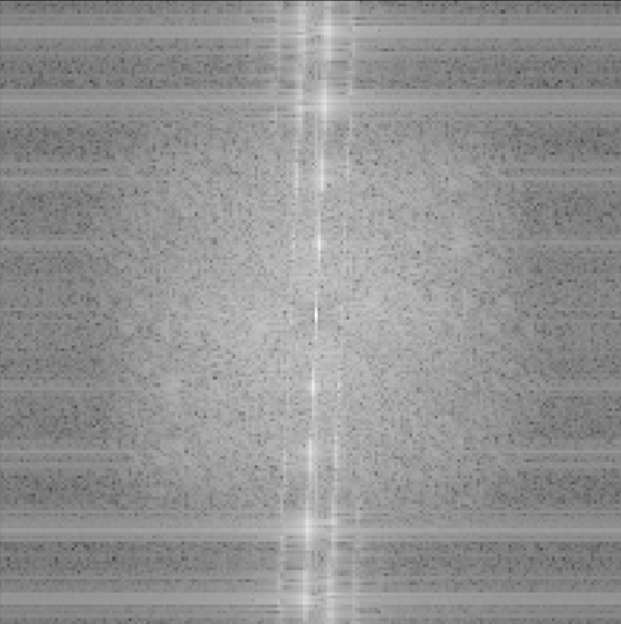

frequency analysis

For the Hulk and Shrek mix, I used the Fourier transform to analyze the frequency content of the original and filtered images.
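A common way to visualize this is the log-magnitude of the 2-D Fourier transform. The sketch below assumes a grayscale float image. The helper name `log_magnitude_spectrum` and the small epsilon added before the logarithm are illustrative choices.

```python
import numpy as np

def log_magnitude_spectrum(gray, eps=1e-8):
    """Log-magnitude of the centered 2-D Fourier transform of a grayscale image."""
    spectrum = np.fft.fftshift(np.fft.fft2(gray))  # move the DC term to the center
    return np.log(np.abs(spectrum) + eps)          # eps avoids log(0)

# A constant image has all of its energy in the DC component.
image = np.ones((8, 8))
spec = log_magnitude_spectrum(image)
peak = np.unravel_index(np.argmax(spec), spec.shape)
```

In this view, a low-pass filtered image keeps energy near the center of the spectrum, while a high-pass filtered image loses it there.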

hybrid

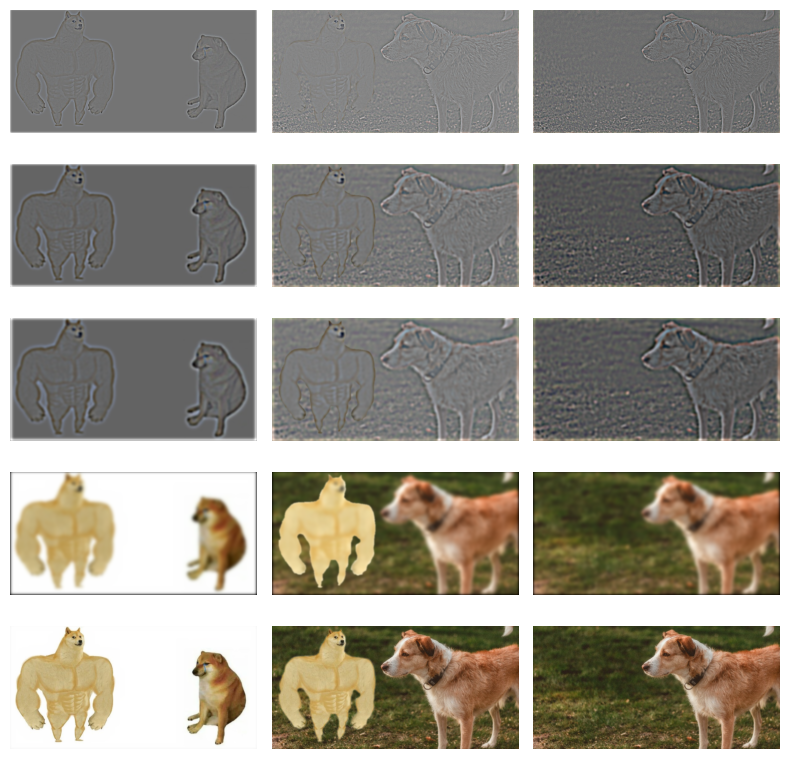

Part 2.3: Gaussian and Laplacian Stacks

mask

a cute dog

buff dog

hybrid image

mask

shark

diving

hybrid images

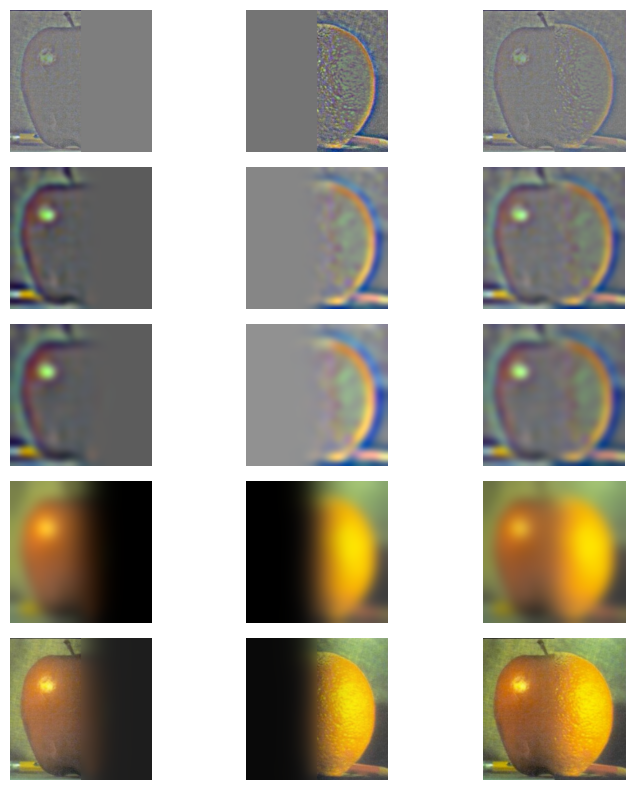

the Laplacian stacks of the blending process for the buff dog
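The stack-based blending can be sketched as follows. This is a minimal sketch under assumptions: grayscale float images of equal size, a mask in [0, 1], the same sigma applied repeatedly at each level, and no downsampling (stacks, not pyramids). The function names and default parameters are illustrative.

```python
import numpy as np
from scipy.ndimage import gaussian_filter

def gaussian_stack(image, levels, sigma=2.0):
    """Repeatedly blur the image without downsampling."""
    stack = [image]
    for _ in range(levels - 1):
        stack.append(gaussian_filter(stack[-1], sigma))
    return stack

def laplacian_stack(image, levels, sigma=2.0):
    """Differences of consecutive Gaussian levels, plus the final blurred level."""
    g = gaussian_stack(image, levels, sigma)
    lap = [g[i] - g[i + 1] for i in range(levels - 1)]
    lap.append(g[-1])  # keep the coarsest level so the stack sums to the image
    return lap

def blend(im_a, im_b, mask, levels=5, sigma=2.0):
    """Blend each frequency band with a progressively smoother mask, then sum."""
    la = laplacian_stack(im_a, levels, sigma)
    lb = laplacian_stack(im_b, levels, sigma)
    gm = gaussian_stack(mask, levels, sigma)
    return sum(m * a + (1.0 - m) * b for m, a, b in zip(gm, la, lb))

rng = np.random.default_rng(0)
im_a, im_b = rng.random((16, 16)), rng.random((16, 16))
mask = np.zeros((16, 16))
mask[:, :8] = 1.0  # left half from im_a, right half from im_b
blended = blend(im_a, im_b, mask)
```

Because the Laplacian stack sums back to the original image, a mask of all ones reproduces `im_a`.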

Conclusion

This project is incredibly fun and interesting. I feel like I'm playing with every pixel. It's the most "intuitive" project I've ever worked on, and it feels like I'm step by step recreating the image transformation features in my phone's photo gallery.